Create a self-service app and API delivery platform with composable Traffic Policy

When delivering APIs to production, you almost always have one team responsible for the resiliency and security of the all-important “front door”—you know, the magical chain of networking and infrastructure that resolves example.com into the API service you’re trying to deliver.

Whether they go by infra, DevOps, platform, or something else entirely, these folks set the security and resiliency policy to safeguard this front door. They get to decide, and enforce, the answer to a fundamental question: What is allowed to enter and leave?

These engineers need that control to keep services online and protected, but it’s also inflexible. The developers who are building API services that operate behind that front door aren’t able to change how it works—instead, they need to file tickets for every change, like setting up a URL rewrite from api.example.com/v1 to api.example.com/v2, for the infra team to handle.

The balance between control and flexibility isn’t quite right. It creates blockers, backlogs, and frustration.

As we’ve been feverishly building out ngrok’s API gateway, we asked: What if infrastructure teams could maintain control ahead of their API’s front door but give development teams access to all API gateway functionality behind it? What if they could provision this quickly, enable their developer peers, and not cede any control over what’s allowed to enter and leave?

That’s now possible using our latest primitives, like internal endpoints, cloud endpoints, and Traffic Policy. With composability, each team manages traffic on its side of the front door, with a clearly defined interface between them and all the autonomy and flexibility they need.

How does composability help developers self-service and ship their APIs?

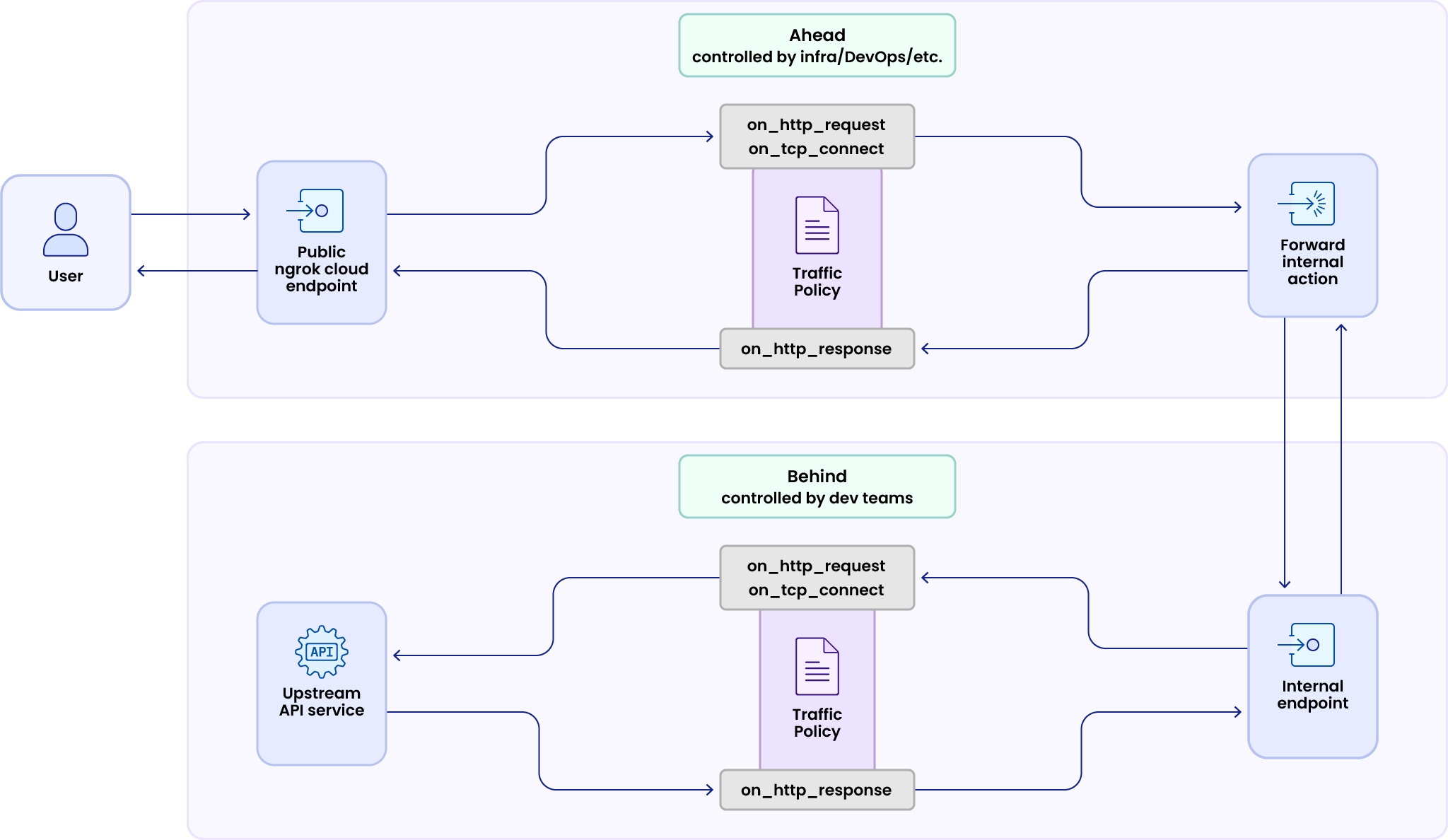

When you configure ngrok as an API gateway, there are multiple key moments in the lifecycle of a request where you can manipulate traffic using Traffic Policy. Every time a request enters an endpoint (public or internal), there’s a new opportunity to run Traffic Policy rules based on the properties of that request.

Here’s a stripped-down version of that request lifecycle through ngrok’s API gateway with a public and internal endpoint, continuing this theme of what happens ahead vs. behind ngrok’s API gateway as a front door:

With this architecture, infrastructure engineers control what’s ahead of the front door on the public cloud endpoint, then give app/API developers access to what’s behind—the internal endpoint. Both endpoints can apply Traffic Policy rules and actions, which means teams have access to the full functionality of ngrok’s API gateway.

But because Traffic Policy rules will always run on public endpoints first, infrastructure engineers can still control security and resiliency on a global level. Developers then compose—not compromise—new traffic management policies onto what you’ve already built.
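As a rough sketch of that split, here are two separate policies shown together: the first would be attached to the public cloud endpoint and the second to an internal endpoint. The internal URL `orders.api.internal` and the `x-served-by` header are hypothetical names chosen for illustration, and the `add-headers` action stands in for whatever the dev team wants to run on their side.

```yaml
# Policy 1: public cloud endpoint (owned by the infra team).
# These rules run first on every request that reaches the gateway.
on_http_request:
  - actions:
      - type: rate-limit
        config:
          name: Global limit
          algorithm: sliding_window
          capacity: 100
          rate: 60s
          bucket_key:
            - "req.headers['host']"
      # Hand the request to the dev team's side of the front door.
      - type: forward-internal
        config:
          url: https://orders.api.internal  # hypothetical internal endpoint
---
# Policy 2: internal endpoint (owned by the dev team).
# These rules only ever see traffic the public endpoint forwarded.
on_http_request:
  - actions:
      - type: add-headers
        config:
          headers:
            x-served-by: orders-api  # hypothetical header
```

The `---` separator is only there to show both policies in one listing. In practice each policy lives with its own endpoint, which is what lets each team edit its half independently.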

No matter whether you wrangle infrastructure or application code, you win out:

- Infra teams get reusability, in that a single cloud endpoint contains all common Traffic Policy rules to use for all services and minimizes configuration sprawl.

- Infra teams also get better encapsulation of components, keeping rules close to the services they apply to, which helps them maintain correctness as systems grow larger.

- Dev teams get flexibility to swap out or reconfigure rules behind the front door without disrupting the entire system, which is a risk you might run with inheritance.

- Both teams get more maintainability, in that these modular components are easier to grok, debug, and optimize when the time comes.

That’s all made possible by your ability to compose multiple policies through multiple endpoints.

Example: Infra-controlled rate limits, self-service logging and redirects on a single public endpoint

To start, you need to set up the ngrok API gateway with the resiliency control you care most about: rate limiting, which keeps a flood of requests from overwhelming any APIs behind your gateway. As someone responsible for provisioning and maintaining infrastructure, you start by creating a cloud endpoint.

If you haven't yet reserved a domain on ngrok, start there.

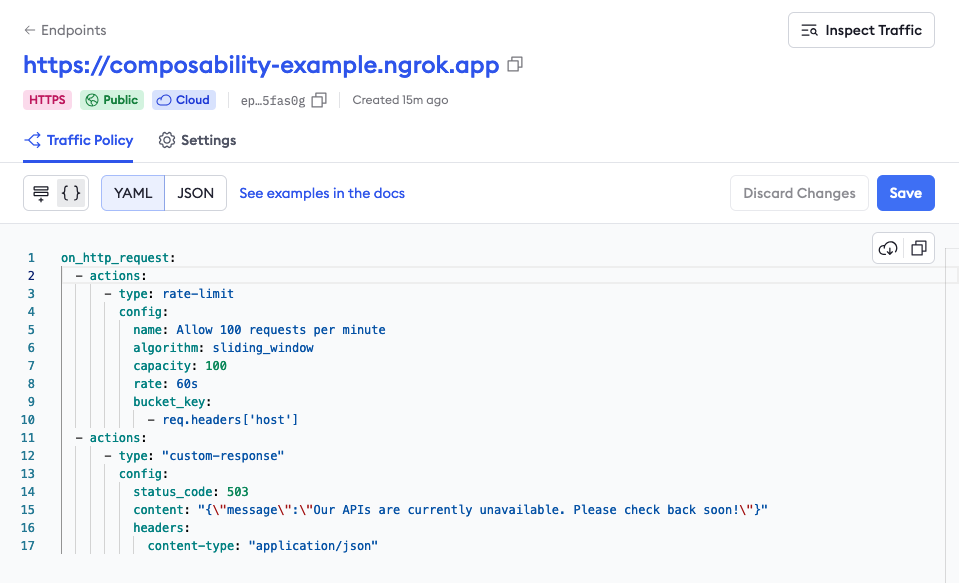

Head over to the Endpoints section of your ngrok dashboard, then click + New and New Cloud Endpoint. Leave the binding as Public, then enter your reserved domain name. When you see the Traffic Policy editor, you can add a set of rules that enforce a global rate limiting policy and a temporary custom response action that terminates every request with a 503 error code.

    on_http_request:
      - actions:
          - type: rate-limit
            config:
              name: Allow 100 requests per minute
              algorithm: sliding_window
              capacity: 100
              rate: 60s
              bucket_key:
                - req.headers['host']
      - actions:
          - type: custom-response
            config:
              status_code: 503
              body: "{\"message\":\"Our APIs are currently unavailable. Please check back soon!\"}"
              headers:
                content-type: "application/json"

Your cloud endpoint would look similar to this:

When you access the domain associated with your cloud endpoint (feel free to try https://composability-example.ngrok.app and hit the rate limit if you dare!), you'll see the custom response error code.

Later, one of your developer peers sticks you with a new ticket: time to bring their newest API to production! They have two primary concerns at the moment:

- Get their API service online as quickly as possible.

- Collect logs for failed responses to help with debugging.

You help them create a new file called foo-api.yaml with the following Traffic Policy rule, which uses the log action and some CEL interpolation to enrich the logging data.

    on_http_response:
      - expressions:
          # Match any response outside the 2xx range.
          - "res.status_code < 200 || res.status_code >= 300"
        actions:
          - type: log
            config:
              metadata:
                message: "Unsuccessful request"
                time: "${time.now}"
                route: "${req.url.path}"
                endpoint_id: "${endpoint.id}"
                success: false

Together, you use the ngrok agent to create a new internal endpoint on foo.api.internal.

    ngrok http 4000 --url https://foo.api.internal --traffic-policy-file foo-api.yaml
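If your peers would rather keep the endpoint definition in version control than retype a command, the same setup could plausibly be declared in an ngrok agent configuration file. The sketch below assumes the version 3 agent config layout (`endpoints`, `upstream`, `traffic_policy`). The endpoint name `foo-api` and the authtoken placeholder are illustrative, so check the field names against the agent config reference before relying on it.

```yaml
version: 3
agent:
  authtoken: <YOUR_AUTHTOKEN>  # placeholder, not a real token
endpoints:
  - name: foo-api  # hypothetical name used to start this endpoint
    url: https://foo.api.internal
    upstream:
      url: 4000  # local port where the API service listens
    # Inline copy of the logging rule from foo-api.yaml.
    traffic_policy:
      on_http_response:
        - expressions:
            - "res.status_code < 200 || res.status_code >= 300"
          actions:
            - type: log
              config:
                metadata:
                  message: "Unsuccessful request"
                  route: "${req.url.path}"
```

With a file like this in place, running `ngrok start foo-api` should bring up the same internal endpoint as the one-line command.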

Your internal endpoint is running, but it’s currently not accessible—time to fix that on your cloud endpoint and global API gateway configuration. Open up your endpoint again in the ngrok dashboard and add a new forward-internal rule to route all traffic arriving on the hostname foo.api.example.com to foo.api.internal.

    ---
    on_http_request:
      - actions:
          - type: rate-limit
            ...
      - expressions:
          - "req.url.host == 'foo.api.example.com'"
        actions:
          - type: forward-internal
            config:
              url: https://foo.api.internal
      - actions:
          - type: custom-response
            ...

As soon as you hit Save in the ngrok dashboard, their API service is available publicly on foo.api.example.com.

Every request that hits your ngrok API gateway first passes through your public endpoint, which runs the rate limiting rule. If the request is within the limit, then ngrok forwards it to the internal endpoint, which composes the logging rule on top. Both you and your API developer peer have full access to the functionality you expect from an API gateway, but as the infrastructure engineer, you ultimately remain in control of what requests are and are not allowed through.

You started with rate limiting, but that could also include JWT validation, IP restrictions, geo-blocking, and any other security or resilience controls you require.
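As a hedged illustration of two of those extra controls, the rules below could sit at the top of the public cloud endpoint's policy, ahead of the existing rate limit. The CIDR range and country list are made-up values, and the sketch assumes the `restrict-ips` and `deny` actions and the `conn.geo.country_code` variable behave as described in the Traffic Policy reference.

```yaml
on_http_request:
  # IP restriction: block a hypothetical abusive range outright.
  - actions:
      - type: restrict-ips
        config:
          enforce: true
          deny:
            - 203.0.113.0/24  # documentation-only range, replace with your own
  # Geo-blocking: reject requests from outside an assumed list of countries.
  - expressions:
      - "!(conn.geo.country_code in ['US', 'CA'])"
    actions:
      - type: deny
        config:
          status_code: 403
  # The existing rate-limit, forward-internal, and custom-response rules follow here.
```

Because these rules live on the public endpoint, they apply to every API behind the gateway without any change to the developers' internal endpoint policies.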

Okay, that’s all great—but you essentially walked your development peers through the entire setup. Won’t they just need your help again when they need to change their side of the ngrok API gateway?

With composability, the answer is no… if they’re a self-starter and can read some docs.

Luckily for you, they are, and after moving functionality to a new route and discussing when to use a redirect or rewrite, they’re ready to add a url-rewrite action. They edit their foo-api.yaml file to implement the rule so that users’ existing API calls don’t break.

    on_http_request:
      - expressions:
          - "req.url.path.startsWith('/users')"
        actions:
          - type: url-rewrite
            config:
              # Escape the ? so it matches a literal question mark.
              from: '/users\?id=([0-9]+)'
              to: "/users/$1/"
    on_http_response:
      - expressions:
          - "res.status_code < 200 || res.status_code >= 300"
        name: "Log unsuccessful requests"
        ...

With the Traffic Policy rule saved, they can bring their internal endpoint back online, self-serving an essential change to their API gateway without any tickets and without circumventing any of the security policies you control.
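For comparison, here is roughly what the redirect alternative they weighed might look like. This is a sketch that assumes the `redirect` action accepts `from`, `to`, and `status_code` fields. The 301 status is an illustrative choice, not something the scenario above specifies.

```yaml
on_http_request:
  - expressions:
      - "req.url.path.startsWith('/users')"
    actions:
      - type: redirect
        config:
          # Same pattern as the rewrite, with ? escaped to match literally.
          from: '/users\?id=([0-9]+)'
          to: '/users/$1/'
          status_code: 301  # assumed: permanent redirect
```

The trade-off is visibility. A rewrite changes the URL before the request reaches the upstream service, so clients never notice. A redirect sends a 3xx response back and asks the client to request the new URL itself, which nudges consumers toward the new route at the cost of an extra round trip.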

The next time the dev team is ready to bring a new API online, you can fully support their needs by adding another forward-internal action to your existing cloud endpoint. Or, you can make your global policy even more robust by using CEL interpolation to create dynamic, scalable routing.

    ---
    on_http_request:
      - actions:
          - type: rate-limit
            ...
      - actions:
          - type: forward-internal
            config:
              # forward from https://*.api.example.com to https://<SUBDOMAIN>.api.internal
              url: https://${req.host.split('.example.com')[0]}.internal
      - actions:
          - type: custom-response
            ...

Time to bring composability to your API gateway

To launch the ngrok API gateway using a similar combination of public and internal endpoints, check out our get started guide. That walks you end-to-end through using an example API service, creating endpoints, and managing APIs with multiple routing topologies.

If your infra team has already gone down the K8s rabbit hole, then a similar guide for shipping ngrok’s API gateway on K8s clusters might be more up your alley. You’ll use the Gateway API for a similar splitting of configuration based on the different personas of who maintains the cluster and who ships APIs to it.

Along the way, be sure to grab a free ngrok account to make all your endpoints and composed Traffic Policy rules accessible to the public internet.

For your questions, concerns, and feature requests, find us on one of these two routes:

- Create an issue in the ngrok community repo.

- Sign up for the next Office Hours livestream and get an answer straight from our DevRel and Product folks.